Wicked Intelligence 4: AI as Interface

One mental model we used to talk about a lot when Socratus was just starting out was the Overton window. We don't use the term as much anymore, for reasons great and small. The Overton window is the range of possibilities that feel realizable at a given point in time. Today, we might say that human settlements on the moon are at the very edge of the possible: they sit at the boundary of our current Overton window. But 200 years ago, lunar settlements would have been pure fantasy. When a new technology comes into being (space flight is a good example, but AI is what we are talking about today), it shifts the Overton window. It changes the possibilities that we feel we can invest in and make real.

But a shifted window doesn't come with a single blueprint. There can be radically different ways to flesh out the possibilities that the Overton window opens up for us. Today, we will be talking about two very different models of how AI can expand the Overton window. The first is what we call the 'agency model' of AI. We will discuss it briefly, but it is not our preferred model. We think that the Overton window of AI, as it applies to challenges faced by civil society, is best fleshed out by a model we call the 'interface model' of AI.

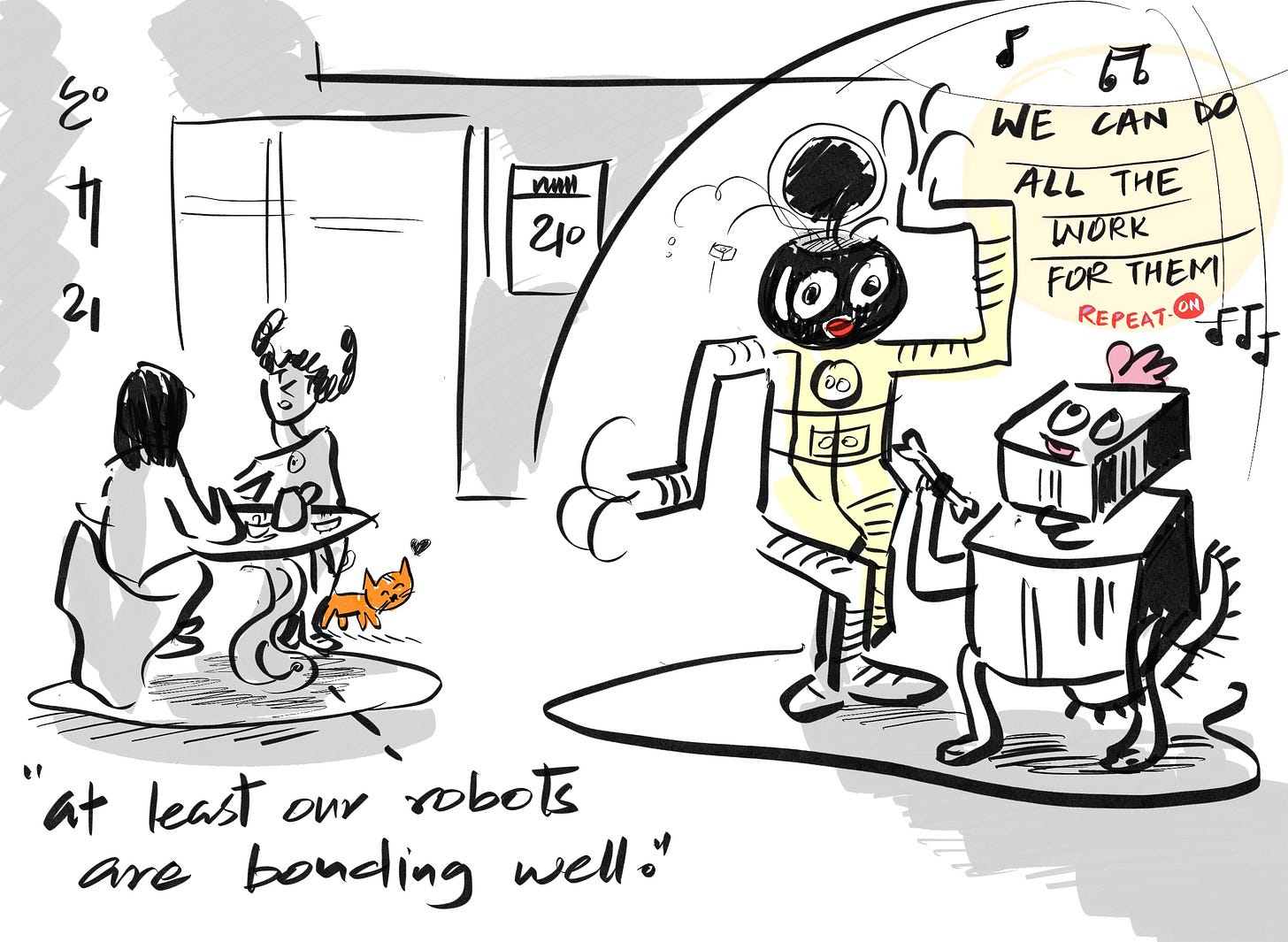

AI as Agent

Most of the time we think of AI as an agent: an entity that fulfills a certain task for you, such as a legal AI that answers all your questions about the law. Say you want to know how to file a complaint at the local police station; the AI might tell you the steps you need to take. What the AI won't tell you is how to get the police officer to take your complaint seriously, or how to avoid being harassed while doing so. Still, delivering that kind of easy-to-digest information is well within the capacity of generative AI. This is 'AI as agent.' It is this AI that provokes so much worry: that it might take your job, take away your agency and replace it with artificial agency.

The 'AI as agent' story invariably works in favor of the powerful because, typically, the artificial agent is an extension of their power. It's the replacement of labour by capital, right? When a machine replaces an office worker, it replaces a human being who does a certain job with a manifestation ('avatar,' to use an Indian term) of capital that does the same job for no pay. So 'AI as agent' is often how people in power who want to replace human beings with machines prefer to think about AI.

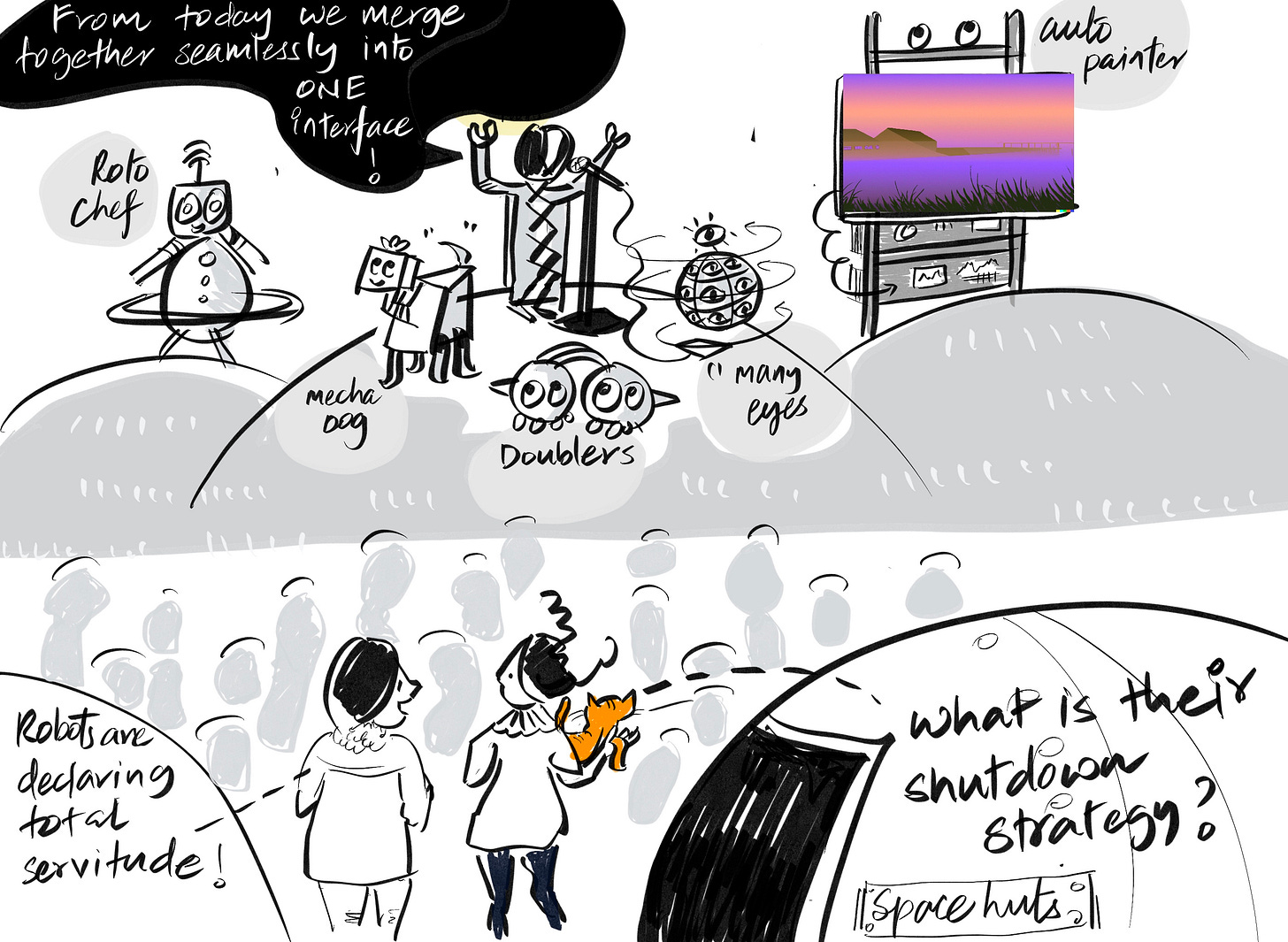

But we can also think about AI differently. We can think of AI as an interface.

AI as Interface

Interfaces have become increasingly important in computing over the last thirty years. Instead of thinking about computing technology as an agent that performs functions on its own behalf, think about it as an aid that helps humans achieve their individual and collective goals. For human purposes, the interface matters more than the inside of the technology, which we often treat as a black box. That is a problem in its own right, and AI is making it worse, but we can usually ignore the inside of the machine when engaging with its interfaces.

Smartphones are the most popular computers ever, and we engage with them through interfaces. We know how to swipe, click and perform other actions without knowing anything about what's inside the smartphone, however great a technical achievement that might be. Through that swiping and clicking we connect with other humans who are swiping and clicking at the same time. Human computing is always about the interface, and AI is going to be no different. For human purposes, we should think about AI as a new set of interfaces that allows us to perform functions we couldn't perform before.

We want to reiterate the importance of 'allows us to perform functions we couldn't perform before.' In its interfacial avatar, AI doesn't perform tasks that you can also perform; it helps you perform tasks that you couldn't otherwise perform.

A Concrete Example: CEAIN

Remember when we talked about interfaces a few weeks ago? That’s this Messenger:

Wystem 2: On Ecosystem APIs


Let’s go back to the example we used in that essay:

Imagine an organization, A, focused on maternal health, ensuring that expectant mothers receive the necessary resources for a safe and comfortable delivery. Now consider another organization, B, dedicated to the nutrition of infants and toddlers. How do we design a seamless transition between these two organizations? How can we ensure that the same family smoothly transitions from maternal care provided by organization A to child nutrition support from organization B? What is the most effective handover mechanism?

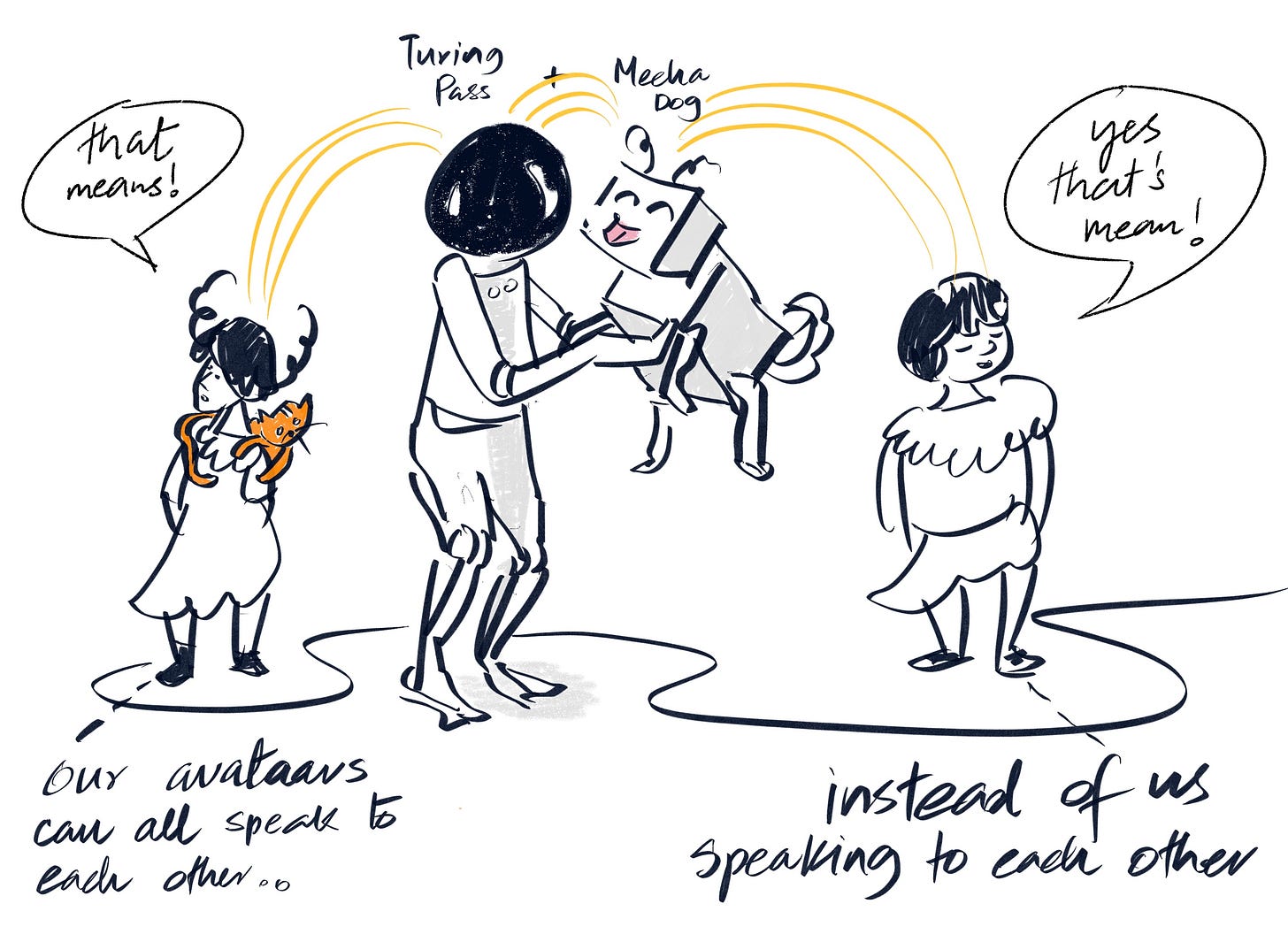

Let’s see how we might enable community health workers (CHWs) to bridge the two sides of this divide using AI. CHW-A starts talking to the family, helps the mother get adequate care and prepares the other members of the family for the changes that are coming. In the process, CHW-A takes part in various conversations that are recorded and transcribed by an AI system, which creates (or updates) a health profile.

The key here is that neither the CHW nor the family members have to do anything besides what they normally do: talk. AI as an interface augments existing human interactions in the background.

After the child is born and CHW-B takes over, they too continue their normal interactions with the child and the mother, while the AI helps surface needs as they emerge. Perhaps the conversations reveal that the child has symptoms correlated with vitamin B deficiency; the AI backend can flag those symptoms so that a blood test is ordered for CHW-B's next visit.
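To make the background pipeline a little more concrete, here is a minimal sketch of the transcript-to-profile-to-flag loop described above. Everything in it is hypothetical: `HealthProfile`, `extract_symptoms`, `update_profile`, the keyword lists and the flagging rule are illustrative names we made up, and simple keyword matching stands in for the speech-to-text and language models a real system would use. The rule is illustrative only and is not clinical guidance.

```python
from dataclasses import dataclass, field

# Hypothetical keyword lists standing in for a real transcription + NLP model.
# Illustrative only; NOT clinical guidance.
SYMPTOM_KEYWORDS = {
    "fatigue": ["tired", "sleepy", "no energy"],
    "pallor": ["pale"],
    "irritability": ["fussy", "irritable", "crying a lot"],
}

# Hypothetical rule: flag when at least `min_matches` of the listed symptoms
# have been observed, and queue an action for the next CHW visit.
FLAG_RULES = [
    {
        "flag": "possible vitamin B deficiency",
        "symptoms": {"fatigue", "pallor", "irritability"},
        "min_matches": 2,
        "action": "order blood test at next CHW visit",
    },
]


@dataclass
class HealthProfile:
    family_id: str
    symptoms: set[str] = field(default_factory=set)
    flags: list[str] = field(default_factory=list)
    next_visit_actions: list[str] = field(default_factory=list)


def extract_symptoms(transcript: str) -> set[str]:
    """Return the symptoms whose keywords appear (as substrings) in a transcript."""
    text = transcript.lower()
    return {
        symptom
        for symptom, keywords in SYMPTOM_KEYWORDS.items()
        if any(keyword in text for keyword in keywords)
    }


def update_profile(profile: HealthProfile, transcript: str) -> HealthProfile:
    """Accumulate symptoms across conversations and raise each flag at most once."""
    profile.symptoms |= extract_symptoms(transcript)
    for rule in FLAG_RULES:
        matches = len(profile.symptoms & rule["symptoms"])
        if matches >= rule["min_matches"] and rule["flag"] not in profile.flags:
            profile.flags.append(rule["flag"])
            profile.next_visit_actions.append(rule["action"])
    return profile


# Usage: two ordinary conversations, transcribed in the background.
profile = HealthProfile(family_id="family-001")
update_profile(profile, "She has been very fussy this week.")
update_profile(profile, "The baby looks a bit pale and seems tired.")
print(profile.flags)               # ['possible vitamin B deficiency']
print(profile.next_visit_actions)  # ['order blood test at next CHW visit']
```

The design point mirrors the essay: the CHW and the family only talk, and the profile accumulates evidence across visits, so no single conversation has to mention everything.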

In this vision, AI systems are a ‘magical interface,’ i.e., computing APIs that perform their tasks in the background while people perform the important caregiving functions in the foreground. We can combine all these interfacial AI systems into a 'Community Empowerment AI Network' (CEAIN), which uses AI-powered interfaces to create seamless, integrated support systems that connect various civil society organizations, so that services are delivered efficiently and holistically to those in need.

As mothers transition from pregnancy to early parenthood, CEAIN’s smart interface connects their care plan with organization B, which is dedicated to infant and toddler nutrition, so that CHW-B joins CHW-A without any effort on the part of the family. This interface ensures that the family continues to receive personalized support without disruption, bridging the gap between maternal health and child nutrition.

A few years later, CEAIN connects preschools with primary schools through smart interfaces that track and support each child’s educational journey. Teachers can access a shared platform that provides insights into student progress, learning preferences, and special needs, ensuring a smooth transition and consistent educational support as the child grows.

And so on... you get the point.

Interfaces Everywhere

CEAIN uses AI interfaces to connect civil society organizations in a seamless and person-centered way. This network tackles the complex and fragmented nature of the social sector by creating robust AI-powered interfaces that facilitate smooth transitions and foster collaboration. For example, in the context of maternal health and infant nutrition, CEAIN enables Organization A, which focuses on maternal care, to seamlessly pass on relevant information and support plans to Organization B, which is dedicated to child nutrition. This transition is managed through a secure AI-driven platform that tracks the family's progress, ensuring continuity of care and a comprehensive approach to their well-being.

The interfaces within CEAIN are dynamic connectors that go beyond simple data transfer, creating collaborative workspaces where organizations can interact, share insights, and co-design solutions. These interfaces host standardized assessments, track milestones, and provide real-time access to resources, ensuring that each family's needs are fully met. For instance, when Organization A completes its maternal health program, the AI interface ensures all relevant information is smoothly handed over to Organization B, allowing them to take over and provide the necessary nutritional support for the child. This interconnected approach promotes shared responsibility and continuous support, empowering families to navigate critical life stages with confidence.
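The handover from Organization A to Organization B can be sketched as a small function that passes on only the fields the receiving organization treats as relevant and records what was shared. This is a minimal sketch under stated assumptions: `CarePlan`, `hand_over`, the `RELEVANT_FIELDS` table, the organization identifiers and the sample record are all hypothetical, and we read "relevant information" as a per-recipient list of allowed fields, which is our interpretation rather than a described CEAIN mechanism.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class CarePlan:
    family_id: str
    owner_org: str
    records: dict[str, object]  # field name -> value


# Hypothetical: the fields each receiving organization treats as "relevant".
RELEVANT_FIELDS = {
    "org_b_child_nutrition": {"child_birth_date", "birth_weight_kg", "feeding_method", "flags"},
}


def hand_over(plan: CarePlan, to_org: str, on: date) -> tuple[CarePlan, dict]:
    """Create the receiving organization's plan and a log entry of what was shared."""
    allowed = RELEVANT_FIELDS.get(to_org, set())  # unknown recipients receive nothing
    shared = {k: v for k, v in plan.records.items() if k in allowed}
    new_plan = CarePlan(family_id=plan.family_id, owner_org=to_org, records=shared)
    log_entry = {
        "family_id": plan.family_id,
        "from": plan.owner_org,
        "to": to_org,
        "date": on.isoformat(),
        "fields_shared": sorted(shared),
    }
    return new_plan, log_entry


# Usage with illustrative (made-up) data.
plan_a = CarePlan(
    family_id="family-001",
    owner_org="org_a_maternal_health",
    records={
        "child_birth_date": "2024-03-01",
        "birth_weight_kg": 3.1,
        "feeding_method": "breastfeeding",
        "flags": ["possible vitamin B deficiency"],
        "prenatal_visit_notes": "routine check-ups",
    },
)
plan_b, log = hand_over(plan_a, "org_b_child_nutrition", date(2024, 3, 15))
print(plan_b.owner_org)      # org_b_child_nutrition
print(log["fields_shared"])  # ['birth_weight_kg', 'child_birth_date', 'feeding_method', 'flags']
```

Keeping a log entry for every handover is one simple way to make the family's progress traceable across organizations; the prenatal notes stay with Organization A because Organization B's allowed-field list does not include them.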

Additionally, CEAIN’s interfaces foster a culture of empathy and collaboration through initiatives like secondment, where staff from one organization temporarily join another to share expertise and build deeper understanding. This human-centered exchange ensures that knowledge flows naturally between organizations, breaking down barriers and creating a cohesive support network. The AI interfaces manage these secondments, providing tools for coordination, knowledge transfer and impact assessment, thus enhancing the overall capacity to address complex social issues. By using AI as a connective tissue within the social sector, CEAIN transforms fragmented efforts into a unified, dynamic ecosystem where innovation and positive change can thrive.

That’s a rosy vision, but surely the AI sun casts a long, dark shadow somewhere?

Yes, but what about the downside?

However you look at it, the value of AI to the end user (a community member) as well as to intermediate users (CHWs, for example) will depend on the depth of profiling that goes on in the background. The better the system understands your healthcare or educational needs, the better the service it can provide. But do we want such profiles to be created? And if they are created, who owns them? Who has access to them, and under what circumstances? Seamless transitions are good, and the judicious use of data can go a long way toward filling gaps in existing healthcare, education and other public systems, but how can we even propose something like CEAIN without addressing the elephant in the room:

Isn’t this a massive breach of privacy waiting to happen? Doesn’t this lend itself to surveillance at an unprecedented scale?

The honest answer is: yes and yes. We would be extremely reluctant to let private (or even governmental) actors collect data at this scale and make that data available to machine learning systems, unless our privacy is guaranteed by design and not via unverifiable promises. In a related context, it’s been said:

The same apparatus that enables unprecedented connectivity enables unprecedented surveillance.

Such systems are invasive by design, recording and storing every digital transaction from an online purchase to chatbot queries to uploaded photos in giant databases that are searchable, not least by snooping governments, aggressive marketers and the large language models of Big Tech.

We turn to these issues, at the intersection of AI, privacy, identity and justice, in the final ‘Messenger on AI’ next week.